Wednesday, November 17, 2010

Spring NamespaceHandler debugging

The net is littered with threads about this exception, all of which end with "put the spring jars in your WEB-INF/lib directory".

I was fairly determined not to do this, as I frequently start a webapp with an embedded instance of Jetty, and use the Eclipse project's classpath for all of the dependencies. Since all of the jars are from the project's classpath, the webapp is always using exactly the same jars that Eclipse is for compiling your code, so you never have the two drift apart.

Too many times, after copying jars to WEB-INF/lib and forgetting about them, I'll upgrade a library, everything compiles fine, but spend an embarrassing amount of time wondering why it's not working in the webapp, before remembering the stale jar in the WEB-INF/lib directory.

Anyway, the real cause of the NamespaceHandler exception in my case was a buggy ClassLoader.getResources implementation.

The way spring works, when doing it's XML parsing/whatever magic, is when it sees "xmlns:tx=...", it wants a new NamespaceHandler that knows how to handle those tags.

To allow extensible NamespaceHandlers, Spring uses ClassLoader.getResources("META-INF/spring.handers") to get a list of all of the spring.handlers files across all of the jar files in the classloader. So if spring-core, spring-tx, spring-etc. all have spring.handlers with NamespaceHandlers in them, each file gets found and loaded.

Here's the rub: whatever Eclipse project classloader that had spring-tx on it only returned spring.handlers files from jars that already had classes loaded from them. The ClassLoader.getResources implementation would not look into jar files that was on its classpath, but had not yet been opened for loading classes from.

Of all things, adding:

Class.forName("org.springframework.transaction.support.TransactionTemplate");

Before spring was initialized fixed the NamespaceHandler error. Everything boots up correctly now.

While it took way too long to figure out, I'm pleased that I can continue using the Eclipse project classloader for the webapp I'm starting and avoid the annoying "copy jars to WEB-INF/lib" solution.

I'd like to know which classloader had the buggy getResources implementation, but I've already spent too much time on this so far.

Friday, November 12, 2010

CSV and Hive

CSV

Anyone who's ever dealt with CSV files knows how much of a pain the format actually is to parse. It's not as simple as splitting on commas -- the fields might have commas embedded in them, so, okay you put quotes around the field... but what if the field had quotes in it? Then you double up the quotes... "okay, ""great""" -- that was a single CSV field.We normally use the excellent opencsv (apache2 licensed) library to deal with CSV files.

Hive

We love Hive. Almost all of our reporting is written as Hive scripts. How do you deal with CSV files with Hive? If you know for sure your fields don't have any commas in them, you can get away with the delimited format. There's the RegexSerDe, but as mentioned the format is non-trivial, and you need to change the regex string depending on how many columns you are expecting.CSVSerde

Enter the CSVSerde. It's a Hive SerDe that uses the opencsv parser to serialize and deserialize tables properly in the CSV format.Using it is pretty simple:

add jar path/to/csv-serde.jar;

create table my_table(a string, b string, ...)

row format serde 'com.bizo.hive.serde.csv.CSVSerde'

stored as textfile

;

This is my first time writing a Hive SerDe. There were a couple of road bumps, but overall I was surprised with how easy it was. I mostly just followed along with RegexSerDe.

I'm sure there are a lot of ways it could be improved, so I'd appreciate any feedback or comments on how to make it better.

Source.

Binary (jar packaged with opencsv).

Tuesday, October 26, 2010

Rolling out to 4 Global Regional Datacenters in 25 minutes

Thursday, October 21, 2010

An experiment in file distribution from S3 to EC2 via bittorrent

One difficulty with this approach is that your response time is strictly bounded by the time it takes for you to spin up a new instance with your application running on it. This isn't a big deal for most servers, but some of our backend systems need multi-GB databases and indexes loaded onto them at startup.

There are several strategies for working around this, including baking the indexes into the AMI and distributing them via EBS volume; however, I was intrigued by the possibility of using S3's bittorrent support to enable peer-to-peer downloads of data. In an autoscaling situation, there are presumably several instances with the necessary data already running, and using bittorrent should allow us to quickly copy that file to a new instance.

Test setup:

All instances were m1.smalls running Ubuntu Lucid in us-east-1, spread across two availability zones. The test file was a 1GB partition of a larger zip file.

For a client, I used the version of Bittornado available in the standard repository (apt-get install -y bittornado). Download and upload speeds were simply read off of the curses interface.

For reference, I clocked a straight download of this file directly from S3 as taking an average of 57 seconds, which translates into almost 18 MB/s.

Test results:

First, I launched a single instance and started downloading from S3. S3 only gave me 70-75KB/s, considerably less than direct S3 downloads.

As the first was still downloading, I launched a second instance. The second instance quickly caught up to the first, then the download rate on each instance dropped to 140-150KB/s with upload rates at half that. Clearly, what was going on was S3 was giving each instance 70-75KB/s of bandwidth, and the peers were cooperating by sharing their downloaded fragments.

To verify this behavior, I then launched two more instances and hooked them into the swarm. Again, the new peers quickly caught up to the existing instances, and download rates settled down to 280-300KB/s on each of the four instances.

So, there's clearly some serious throttling going on when downloading from S3 via bittorrent. However, the point of this experiment is not the S3 -> EC2 download speed but the EC2 <-> EC2 file sharing speed.

Once all four of these instances were seeding, I added a fifth instance to the swarm. Download rates on this instance maxed out at around 12-13 MB/s. Once this instance was seeding, I added a sixth instance to the swarm to see if bandwidth would continue to scale up, but I didn't see an appreciable difference.

So, it looks like using bittorrent within EC2 is actually only about 2/3rds as fast as downloading directly from S3. In particular, even with a better tuned environment (eg, moving to larger instances to eliminate sharing physical bandwidth with other instances), it doesn't look like we would get any significant decreases in download times by using bittorrent.

Friday, October 1, 2010

Killing java processes

It gets tiring to write,

$ jps -lvfollowed by,

48231 /opt/eclipse-3.5.1/org.eclipse.equinox.launcher_1.0.201.jar -Xmx1024m

10258 /opt/boisvert/jedit-4.3.2/jedit.jar -Xmx192M

5295 sun.tools.jps.Jps -Dapplication.home=/opt/boisvert/jdk1.6.0_21 -Xms8m

$ kill 48231You know, with the cut & paste in-between ... so I have this Ruby shell script called

killjava, a close cousin of killall:$ killjava -hthat does the job. It's not like I use it everyday but everytime I use it, I'm glad it's there.

killjava [-9] [-n] [java_main_class]

-9, --KILL Send KILL signal instead of TERM

-n, --no-prompt Do not prompt user, kill all matching processes

-h, --help Show this message

Download the script from Github (requires Ruby and UNIX-based OS).

modern IDEs influencing coding style?

It would be nice if globals, locals, and members could be syntax colored differently. That would be better than g_ and m_ prefixes.

I saw this from John Carmack last week and thought, what a great idea! It seems very natural and easy to do and makes a lot more sense than crazy prefix conventions. I've been mostly programming in Java, so the conventions are a little different, but I'd love it if we could get rid of using redundant "this" qualifiers to signal member variables, and the super ugly ALL_CAPS for constants... it just seems so outdated.

Eclipse actually provides this kind of highlighting already:

Notice that the member variable "greeting" is always in blue, while the non-member variables are never highlighted. Also, the public static constant "DEFAULT_GREETING" is blue and italicized.

Notice that if you rename DEFAULT_GREETING, it's still completely recognizable as a constant:

I think it's interesting that modern IDEs are able to give us so much more information about the structure of our programs. Stuff you used to have to explicitly call out via conventions like these. How long until we're ready to make the leap and change our code conventions to keep up with our tools?

The main argument against relying on tools to provide this kind of information is that not all tools have caught up. I'm not sure I completely buy this. Hopefully you're not actually remotely editing production code in vi or something. There are a lot of web apps for viewing commits and performing code reviews, and they're unlikely to be as fully featured as your favorite IDE. Still, the context is often limited enough to avoid confusion, and the majority of our time is spent in our IDEs anyway.

So, can we drop the ALL_CAPS already?

Wednesday, September 29, 2010

emr: Cannot run program "bash": java.io.IOException: error=12, Cannot allocate memory

java.io.IOException: Task: attempt_201007141555_0001_r_000009_0 - The reduce copier failed

at org.apache.hadoop.mapred.ReduceTask.run(ReduceTask.java:384)

at org.apache.hadoop.mapred.Child.main(Child.java:170)

Caused by: java.io.IOException: Cannot run program "bash": java.io.IOException: error=12, Cannot allocate memory

at java.lang.ProcessBuilder.start(ProcessBuilder.java:459)

at org.apache.hadoop.util.Shell.runCommand(Shell.java:149)

at org.apache.hadoop.util.Shell.run(Shell.java:134)

at org.apache.hadoop.fs.DF.getAvailable(DF.java:73)

at org.apache.hadoop.fs.LocalDirAllocator$AllocatorPerContext.getLocalPathForWrite(LocalDirAllocator.java:329)

at org.apache.hadoop.fs.LocalDirAllocator.getLocalPathForWrite(LocalDirAllocator.java:124)

at org.apache.hadoop.mapred.MapOutputFile.getInputFileForWrite(MapOutputFile.java:160)

at org.apache.hadoop.mapred.ReduceTask$ReduceCopier$InMemFSMergeThread.doInMemMerge(ReduceTask.java:2622)

at org.apache.hadoop.mapred.ReduceTask$ReduceCopier$InMemFSMergeThread.run(ReduceTask.java:2586)

Caused by: java.io.IOException: java.io.IOException: error=12, Cannot allocate memory

at java.lang.UNIXProcess.(UNIXProcess.java:148)

at java.lang.ProcessImpl.start(ProcessImpl.java:65)

at java.lang.ProcessBuilder.start(ProcessBuilder.java:452)

... 8 more

There's some discussion on this thread in the emr forums.

From Andrew's response to the thread:

The issue here is that when Java tries to fork a process (in this case bash), Linux allocates as much memory as the current Java process, even though the command you are running might use very little memory. When you have a large process on a machine that is low on memory this fork can fail because it is unable to allocate that memory.

The workaround here is to either use an instance with more memory (m2 class), or reduce the number of mappers or reducers you are running on each machine to free up some memory.

Since the task I was running was reduce heavy, I chose to just drop the number of mappers from 4 to 2. You can do this pretty easy with the emr bootstrap actions.

My job ended up looking something like this:

elastic-mapreduce --create --name "awesome script" \

--num-instances 8 --instance-type m1.large \

--hadoop-version 0.20 \

--args "-s,mapred.tasktracker.map.tasks.maximum=2" \

(relevant parts highlighted).

Tuesday, September 21, 2010

Salesforce and DART Synchronization

- It’s a tool that I could see being used in our live Salesforce instance.

- It seems like a typical use case for extending Salesforce (i.e. integrating with a 3rd party SOAP service).

At a high-level, I wanted to call DART’s DFP API from within Salesforce and then update an Account object in Salesforce with the Advertiser Id returned from DART. However, I first needed to authenticate with Google’s ClientLogin service in order to get an authentication token for calling the DFP API.

APEX

APEX is the programming language that allows a developer to customize a Salesforce installation. APEX’s syntax, not surprisingly, is very similar to Java. The really interesting thing is that none of the code you write actually compiles or runs on your machine. All compilation and execution happen “in the cloud”.

DART Integration

Salesforce has a strict security model. In order to make a request to a Web Service you actually need to configure any URLs you are accessing as a Remote Site. Instructions for doing this can be found here. For this project, I simply needed to add https://www.google.com as a Remote Site.

There are a couple of options for calling a Web Service via APEX:

- Use the Http/HttpRequest/Http APEX classes. These are useful for calling REST style services.

- Import a WSDL and use the generated code to make a SOAP request.

Here is the APEX code I developed for calling Google’s ClientLogin authentication service:

public class GoogleAuthIntegration {

private static String CLIENT_AUTH_URL = 'https://www.google.com/accounts/ClientLogin';

// login to google with the given email and password

public static String performClientLogin(final String email, final String password) {

final Http http = new Http();

final HttpRequest request = new HttpRequest();

request.setEndpoint(CLIENT_AUTH_URL);

request.setMethod('POST');

request.setHeader('Content-type', 'application/x-www-form-urlencoded');

final String body = 'service=gam&accountType=GOOGLE&' + 'Email=' + email + '&Passwd=' + password;

request.setBody(body);

final HttpResponse response = http.send(request);

final String responseBody = response.getBody();

final String authToken = responseBody.substring(responseBody.indexOf('Auth=') + 5).trim();

System.debug('authToken is: ' + authToken);

return authToken;

}

}

Salesforce provides a way to import a WSDL file via its Admin UI. It then parses and generates APEX code that allows you to call methods exposed by the WSDL. However, when I tried importing DFP’s Company Service WSDL, I ran into some errors:

It turns out that the WSDL contains an element named ‘trigger’ and trigger is a reserved APEX keyword. In any event, I ended up copy/pasting the generated code and fixing it so that it compiled correctly (I also ran into a problem where generated exception classes were not extending Exception).

Once the code to call the DFP Company Service was compiling, I created an APEX controller to perform the update on an Account record.

public class SyncDartAccountController {

private final Account acct;

public SyncDartAccountController(ApexPages.StandardController stdController) {

this.acct = (Account) stdController.getRecord();

}

// Code we will invoke on page load.

public PageReference onLoad() {

String theId = ApexPages.currentPage().getParameters().get('id');

if (theId == null) {

// Display the Visualforce page's content if no Id is passed over

return null;

}

// get authToken for DFP API requests

String authToken = GoogleAuthIntegration.performClientLogin('xxx@xxx.com', 'xxxx');

// get Account with the given id

for (Account o:[select id, name from Account where id =:theId]) {

DartCompanyService.CompanyServiceInterfacePort p = new DartCompanyService.CompanyServiceInterfacePort();

p.RequestHeader = new DartCompanyService.SoapRequestHeader();

p.RequestHeader.applicationName = 'sampleapp';

// prepare the DFP query and execute

DartCompanyService.Statement filterByNameAndType = new DartCompanyService.Statement();

filterByNameAndType.query = 'WHERE name = \'' + o.Name + '\' and type = \'ADVERTISER\'';

DartCompanyService.CompanyPage page = p.getCompaniesByStatement(filterByNameAndType);

if (page.totalResultSetSize > 0) {

// update the record if we get a result

o.Dart_Advertiser_Id__c = page.results.get(0).id;

update o;

}

}

// Redirect the user back to the original page

PageReference pageRef = new PageReference('/' + theId);

pageRef.setRedirect(true);

return pageRef;

}

}Then, I created a simple Visuaforce page to invoke the controller:

<apex:page standardController="Account" extensions="SyncDartAccountController" action="{!onLoad}">

<apex:sectionHeader title="Auto-Running Apex Code"/>

<apex:outputPanel >

You tried calling Apex Code from a button. If you see this page, something went wrong.

You should have been redirected back to the record you clicked the button from.

</apex:outputPanel>

</apex:page>

1) Click on ‘Buttons and Links’:

2) Click New:

3) Enter the info for the new button:

4) After clicking on Save, we can add the button to the Account page layout. The final result:

Final Thoughts

This was my first foray into APEX programming in Salesforce and I was pleased with the overall set of tools and ability to be productive quickly. The only hiccup I encoutered was in the WSDL generation step and this issue was fairly easy to overcome. There are good developer docs and there are ways to add debug logging (which I didn’t go over) as well as a framework for unit testing.

Monday, September 20, 2010

quick script: emr-mailer

The script will download files from an s3 url, concatenate them together, zip up the results and send it as an attachment to a specified email address. It sends email through smtp.mail.com, using account credentials you specify.

I wanted to make it easy to just append an additional step to any existing job, not requiring any additional machine setup or dependencies. I was able do this by making use of amazon's script-runner (s3://us-east-1.elasticmapreduce/libs/script-runner/script-runner.jar). The script-runner.jar step will let you execute an arbitrary script from a location in s3 as an emr job step.

As I mentioned, the intended usage is to run it as a job step with your hive script, passing it in the location of the resulting report.

E.g.:

elastic-mapreduce --create --name "my awesome report ${MONTH}" \

--num-instances 10 --instance-type c1.medium --hadoop-version 0.20 \

--hive-script --arg s3://path/to/hive/script.sql \

--args -d,MONTH=${MONTH} --args -d,START=${START} --args -d,END=${END} \

--jar s3://us-east-1.elasticmapreduce/libs/script-runner/script-runner.jar \

--args s3://path/to/emr-mailer/send-report.rb \

--args -n,report_${MONTH} --args -s,"my awesome report ${MONTH}" \

--args -e,awesome-reports@company.com \

--args -r,s3://path/to/report/results

Above you can see I'm starting a hive report as normal, then simply appending the script-runner step, calling the emr-mailer send-report.rb, telling it where the report will end up, and details about the email.

The full source code is available on github as emr-mailer.

The script is pretty simple, but let me know if you have any suggestions for improvements or other feedback.

Friday, August 13, 2010

Collecting User Actions with GWT

While I was at one of the Google I/O GWT sessions (courtesy of Bizo), a Google presenter mentioned how one of their internal GWT applications tracks user actions.

The idea is really just a souped-up, AJAX version of server-side access logs: capturing, buffering, and sending fine-grained user actions up to the server for later analysis.

The Google team was using this data to make A/B-testing-style decisions about features–which ones were being used, not being used, tripping users up, etc.

I thought the idea was pretty nifty, so I flushed out an initial implementation in BizAds for Bizo’s recent hack day. And now I am documenting my wild success for Bizo’s first post-hack-day “beer & blogs” day.

No Access Logs

Traditional, page-based web sites typically use access logs for site analytics. For example, the user was on a.html, then b.html. Services like Google Analytics can then slice and dice your logs to tell you interesting things.

However, desktop-style one-page webapps don’t generate these access logs–the user is always on the first page–so they must rely on something else.

This is pretty normal for AJAX apps, and Google Analytics already supports it via its asynchronous API.

We had already been doing this from GWT with code like:

public native void trackInGA(final String pageName) /*-{

$wnd._gaq.push(['_trackPageview', pageName]);

}-*/;And since we’re using a MVP/places-style architecture (see gwt-mpv), we just call this on each place change. Done.

Google Analytics is back in action, not a big deal.

Beyond Access Logs

What was novel, to me, about this internal Google application’s approach was how the tracked user actions were much more fine-grained than just “page” level.

For example, which buttons the user hovers over. Which ones they click (even if it doesn’t lead to a page load). What client-side validation messages are tripping them up. Any number of small “intra-page” things that are nonetheless useful to know.

Obviously there are a few challenges, mostly around not wanting to detract from the user experience:

- How much data is too much?

Tracking the mouse over of every element would be excessive. But the mouse over of key elements? Should be okay.

- How often to send the data?

If you wait too long while buffering user actions before uploading them to the server, the user may leave the page and you’ll lose them. (Unless you use a page unload hook, and the browser hasn’t crashed.)

If you send data too often, the user might get annoyed.

The key to doing this right is having metrics in place to know whether you’re prohibitively affecting the user experience.

The internal Google team had these metrics for their application, and that allowed them to start out batch uploading actions every 30 seconds, then every 20 seconds, and finally every 3 seconds. Each time they could tell the users’ experience was not adversely affected.

Unfortunately, I don’t know what exactly this metric was (I should have asked), but I imagine it’s fairly application-specific–think of GMail and average emails read/minute or something like that.

Implementation

I was able to implement this concept rather easily, mostly by reusing existing infrastructure our GWT application already had.

When interesting actions occur, I have the presenters fire a generic UserActionEvent, which is generated using gwt-mpv-apt from this spec:

@GenEvent

public class UserActionEventSpec {

@Param(1)

String name;

@Param(2)

String value;

@Param(3)

boolean flushNow;

}Initiating the tracking an action is now just as simple as firing an event:

UserActionEvent.fire(

eventBus,

"someAction",

"someValue",

false);I have a separate, decoupled UserActionUploader, which is listening for these events and buffers them into a client-side list of UserAction DTOs:

private class OnUserAction implements UserActionHandler {

public void onUserAction(final UserActionEvent event) {

UserAction action = new UserAction();

action.user = defaultString(getEmailAddress(), "unknown");

action.name = event.getName();

action.value = event.getValue();

actions.add(action);

if (event.getFlushNow()) {

flush();

}

}

}UserActionUploader sets a timer that every 3 seconds calls flush:

private void flush() {

if (actions.size() == 0) {

return;

}

ArrayList<UserAction> copy =

new ArrayList<UserAction>(actions);

actions.clear();

async.execute(

new SaveUserActionAction(copy),

new OnSaveUserActionResult());

}The flush method uses gwt-dispatch-style action/result classes, also generated by gwt-mpv-apt, to the server via GWT-RPC:

@GenDispatch

public class SaveUserActionSpec {

@In(1)

ArrayList<UserAction> actions;

}This results in SaveUserActionAction (okay, bad name) and SaveUserActionResult DTOs getting generated, with nice constructors, getters, setters, etc.

On the server-side, I was able to reuse an excellent DatalogManager class from one of my Bizo colleagues (unfortunately not open source (yet?)) that buffers the actions data on the server’s hard disk and then periodically uploads the files to Amazon’s S3.

Once the data is in S3, it’s pretty routine to setup a Hive job to read it, do any fancy reporting (grouping/etc.), and drop it into a CSV file. For now I’m just listing raw actions:

-- Pick up the DatalogManager files in S3

drop table dlm_actions;

create external table dlm_actions (

d map<string, string>

)

partitioned by (dt string comment 'yyyyddmmhh')

row format delimited

fields terminated by '\n' collection items terminated by '\001' map keys terminated by '\002'

location 's3://<actions-dlm-bucket>/<folder>/'

;

alter table dlm_actions recover partitions;

-- Make a csv destination also in S3

create external table csv_actions (

user string,

action string,

value string

)

row format delimited fields terminated by ','

location 's3://<actions-report-bucket/${START}-${END}/parts'

;

-- Move the data over (nothing intelligent yet)

insert overwrite table csv_actions

select dlm.d["USER"], dlm.d["ACTION"], dlm.d["VALUE"]

from dlm_actions dlm

where

dlm.dt >= '${START}00' and dlm.dt < '${END}00'

;Then we use Hudson as a cron-with-a-GUI to run this Hive script as an Amazon Elastic Map Reduce job once per day.

Testing

Thanks to the awesomeness of gwt-mpv, the usual GWT widgets, GWT-RPC, etc., can be doubled-out and testing with pure-Java unit tests.

For example, a method from UserActionUploaderTest:

UserActionUploader uploader = new UserActionUploader(registry);

StubTimer timer = (StubTimer) uploader.getTimer();

@Test

public void uploadIsBuffered() {

eventBus.fireEvent(new UserActionEvent("someaction", "value1", false));

eventBus.fireEvent(new UserActionEvent("someaction", "value2", false));

assertThat(async.getOutstanding().size(), is(0)); // buffered

timer.run();

final SaveUserActionAction a1 = async.getAction(SaveUserActionAction.class);

assertThat(a1.getActions().size(), is(2));

assertAction(a1, 0, "anonymous", "someaction", "value1");

assertAction(a1, 1, "anonymous", "someaction", "value2");

}The usual GWT timers are stubbed out by a StubTimer, which we can manually tick via timer.run() to deterministically test timer-delayed business logic.

That’s It

I can’t say we have made any feature-altering decisions for BizAds based on the data gathered from this approach yet–technically its not live yet. But it’s so amazing that surely we will. Ask me about it sometime in the future.

hackday: analog meters

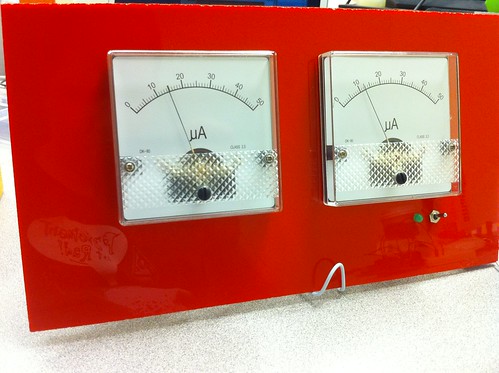

I've always wanted to have some cool old-school analog VU type meters displaying web requests.

Here's my completed hackday project:

Here's a view of the components from the back:

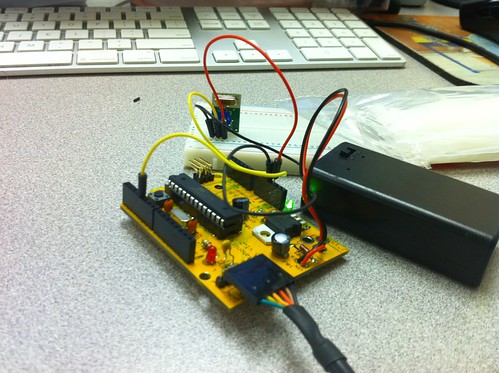

It's battery operated and receives data wirelessly over RF from another arduino I have hooked up via serial to my laptop.

It's pretty simple, but I'm still totally psyched about how it came out.

The main components are some analog panel meters (kinda pricey, but awesome), and an RF receiver. The frame is a piece of scrap acrylic from TAP Plastics that I drilled and cut to size, and the stand is a piece of a wire clothes hanger bent to shape.

Connected to my computer is a another arduino (actually a volksduino) that receives updates over USB and sends the data out over RF:

You may be asking, why bother with wireless if you need a computer hooked up through serial anyway. Or you may ask why not just connect to a wireless network directly.

Well, I wanted the meters to be able to be moved around, or mounted on a wall... I wanted them wireless. But, it turns out that wireless and even ethernet solutions for connecting an arduino to the internet directly are comparatively pretty expensive. Even using bluetooth is expensive. My long term plan is to have a single arduino connected to the internet directly (via ethernet or wireless), and have it serve as a proxy over RF for the others... So this is a bit of work towards that.

I wrote a bit of Java code to connect to amazon's cloudwatch to pull the load balancer statistics for two of our services. I then discovered it's near impossible to connect to anything over USB in Java... It is ridiculous. Luckily, it's REALLY easy to do this with Processing, so I wrote a simple processing program that used my cloudwatch library and wrote it out to serial.

And that's really it. The arduino reads data over serial, and periodically sends it over RF. The arduino hooked up to the meters simply reads the values over RF and sets the meters to display a scaled version of the results. They're showing requests per second. We get a huge amount of requests per second with these services, so the numbers on the dial aren't actually correct (I need to make some custom faceplates). It also flashes an LED every time it gets a RF transmission.

Here's a quick video of it in action:

The one thing I'm not crazy about is that the maximum resolution you can get from cloudwatch is stats per minute, so the meters don't actually change as often as I would like.

Still, pretty cool. I'm looking forward to building some more displays like this in the future.

Thursday, July 29, 2010

Extending Hive with Custom UDTFs

Let’s take a look at the canonical word count example in Hive: given a table of documents, create a table containing each word and the number of times it appears across all documents.

Here’s one implementation from the Facebook engineers:

CREATE TABLE docs(contents STRING);

FROM (

MAP docs.contents

USING 'tokenizer_script'

AS

word,

cnt

FROM docs

CLUSTER BY word

) map_output

REDUCE map_output.word, map_output.cnt

USING 'count_script'

AS

word,

cnt

;

In this example, the heavy lifting is being done by calling out to two scripts, ‘tokenizer_script’ and ‘count_script’, that provide custom mapper logic and reducer logic.

Hive 0.5 adds User Defined Table-Generating Functions (UDTF), which offers another option for inserting custom mapper logic. (Reducer logic can be plugged in via a User Defined Aggregation Function, the subject of a future post.) From a user perspective, UDTFs are similar to User Defined Functions except they can produce an arbitrary number of output rows for each input row. For example, the built-in UDTF “explode(array A)” converts a single row of input containing an array into multiple rows of output, each containing one of the elements of A.

So, let’s implement a UDTF that does the same thing as the ‘tokenizer_script’ in the word count example. Basically, we want to convert a document string into multiple rows with the format (word STRING, cnt INT), where the count will always be one.

The Tokenizer UDTF

To start, we extend the org.apache.hadoop.hive.ql.udf.generic.GenericUDTF class. (There is no plain UDTF class.) We need to implement three methods: initialize, process, and close. To emit output, we call the forward method.

Adding a name and description:

@description(name = "tokenize", value = "_FUNC_(doc) - emits (token, 1) for each token in the input document")

public class TokenizerUDTF extends GenericUDTF {

You can add a UDTF name and description using a @description annotation. These will be available on the Hive console via the show functions and describe function tokenize commands.

The initialize method:

public StructObjectInspector initialize(ObjectInspector[] args) throws UDFArgumentException

This method will be called exactly once per instance. In addition to performing any custom initialization logic you may need, it is responsible for verifying the input types and specifying the output types.

Hive uses a system of ObjectInspectors to both describe types and to convert Objects into more specific types. For our tokenizer, we want a single String as input, so we’ll check that the input ObjectInspector[] array contains a single PrimitiveObjectInspector of the STRING category. If anything is wrong, we throw a UDFArgumentException with a suitable error message.

if (args.length != 1) {

throw new UDFArgumentException("tokenize() takes exactly one argument");

}

if (args[0].getCategory() != ObjectInspector.Category.PRIMITIVE

&& ((PrimitiveObjectInspector) args[0]).getPrimitiveCategory() != PrimitiveObjectInspector.PrimitiveCategory.STRING) {

throw new UDFArgumentException("tokenize() takes a string as a parameter");

}

We can actually use this object inspector to convert inputs into Strings in our process method. This is less important for primitive types, but it can be handy for more complex objects. So, assuming stringOI is an instance variable,

stringOI = (PrimitiveObjectInspector) args[0];

Similarly, we want our process method to return an Object[] array containing a String and an Integer, so we’ll return a StandardStructObjectInspector containing a JavaStringObjectInspector and a JavaIntObjectInspector. We’ll also supply names for these output columns, but they’re not really relevant at runtime since the user will supply his or her own aliases.

List fieldNames = new ArrayList(2);

List fieldOIs = new ArrayList(2);

fieldNames.add("word");

fieldNames.add("cnt");

fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

fieldOIs.add(PrimitiveObjectInspectorFactory.javaIntObjectInspector);

return ObjectInspectorFactory.getStandardStructObjectInspector(fieldNames, fieldOIs);

}

The process method:

public void process(Object[] record) throws HiveException

This method is where the heavy lifting occurs. This gets called for each row of the input. The first task is to convert the input into a single String containing the document to process:

String document = (String) stringOI.getPrimitiveJavaObject(record[0]);

We can now implement our custom logic:

if (document == null) {

return;

}

String[] tokens = document.split(“\\s+”);

for (String token : tokens) {

forward(new Object[] { token, Integer.valueOf(1) });

}

}

The close method:

public void close() throws HiveException { }

This method allows us to do any post-processing cleanup. Note that the output stream has already been closed at this point, so this method cannot emit more rows by calling forward. In our case, there’s nothing to do here.

Packaging and use:

We deploy our TokenizeUDTF exactly like a UDF. We deploy the jar file to our Hive machine and enter the following in the console:

> add jar TokenizeUDTF.jar ;

> create temporary function tokenize as ’com.bizo.hive.udtf.TokenizeUDTF’ ;

> select tokenize(contents) as (word, cnt) from docs ;

This gives us the intermediate mapped data, ready to be reduced by a custom UDAF.

The code for this example is available in this gist.

Friday, June 25, 2010

Come work at Bizo

We're looking to hire a junior developer and a quantitative engineer.

Send us your resume! Or if you know someone in the Bay Area that you think might be a good fit, please forward the posting to them.

Tuesday, June 8, 2010

Accessing Bizo API using Ruby OAuth

Among other things, the interface provides search term suggestions based on business title classification, using the Classify operation of the Bizo API.

I chose to use Ruby and the lightweight Sinatra web framework for fast prototyping and since the Bizo API uses OAuth for authentication, I reached out for the excellent OAuth gem.

Now, while the documentation is good it took me a little time to grok the OAuth API and figure out how to use it. The Bizo API does not use a RequestToken; instead we use an API key and a shared secret. Since the OAuth gem documentation didn't include any example for this use-case, I figured I'd post my code here as a starting point for other people to reuse.

Without further ado, here's the short code fragment:

If you're curious, here's the JSON response for the "VP of Market..." title classification,

require 'rubygems'

require 'oauth'

require 'oauth/consumer'

require 'json'

key = 'xxxxxxxx'

secret = 'yyyyyyyy'

consumer = OAuth::Consumer.new(key, secret, {

:site => "http://api.bizographics.com",

:scheme => :query_string,

:http_method => :get

})

title = "VP of Marketing"

path = URI.escape("/v1/classify.json?api_key=#{key}&title=#{title}")

response = consumer.request(:get, path)

# Display response

p JSON.parse(response.body)

{

"usage" => 1,

"bizographics" => {

"group" => { "name" => "High Net Worth", "code" => "high_net_worth" },

"functional_area" => [

{"name" => "Sales", "code" => "sales" },

{"name" => "Marketing", "code" => "marketing" }

],

"seniority" => {"name" => "Executives", "code" => "executive" }

}

}

Hopefully this is useful to Rubyists out there needing quick OAuth integration using HTTP GET and a query string and don't need to go through the token exchange process.

Tuesday, May 11, 2010

Hackday: dependency searching using scala, jersey, gxp, mongodb

I borrowed the main layout from the SpringSource Enterprise Bundle Repository. I'm pretty happy with the results:

And the detail view:

There's also a browse view.

I've been really happy using scala and jersey, and I wanted something simple and easy for this project, so I thought it was worth a shot. After adding GXP for templating support, I have to say the combination of scala/jersey/GXP makes a pretty compelling framework for simple web apps.

As an example, here's the beginning of my 'Browse' Controller:

@Path("/b")

class Browse {

val db = new RepoDB

@Path("/o")

@GET @Produces(Array("text/html"))

def browseOrg() = browseOrgLetter("A")

@Path("/o/{letter}")

@GET @Produces(Array("text/html"))

def browseOrgLetter(@PathParam("letter") letter : String) = {

val orgs = db.getOrgLetters

val results = db.findByOrgLetter(letter, 30)

BrowseView.getGxpClosure("Organization", "o", orgs, letter, results)

}

...

It's using nested paths, so /b/o is the main browse by organization page, /b/o/G would be all organizations starting with 'G'.

Then, I have a simple MessageBodyWriter that can render a GxpClosure:

@Provider

@Produces(Array("text/html"))

class GxpClosureWriter extends MessageBodyWriter[GxpClosure] {

val context = new GxpContext(Locale.US)

override def isWriteable(dataType: java.lang.Class[_], ...) = {

classOf[GxpClosure].isAssignableFrom(dataType)

}

override def writeTo(gxp: GxpClosure, ...) {

val out = new java.io.OutputStreamWriter(_out)

gxp.write(out, context)

}

...

And, that's really all there is to it. Nice, simple, and lightweight.

Last but not least, mongodb. It was probably overkill for this project, but I was looking for an excuse to play with it some more. I use it to store and index all of the repository information. I have a separate crawler process that lists everything in our repository s3 bucket, then stores an entry for each artifact. As part of this, it does some basic tokenizing of the organization and artifact names for searching. Searching like this was a little disappointing compared to lucene. Overall though, I'm pretty happy with it. Browsing and searching are both ridiculously fast. Like I said, it was probably overkill for the amount of data we have.... but it can never be too fast. speed is most definitely a feature.

Anyway, that's the wrap-up.

I'd be interested to other thoughts/experiences on mongodb from anyone out there.

Wednesday, May 5, 2010

Improving Global Application Performance, continued: GSLB with EC2

CloudFront is a great CDN to consider, especially if you're already an Amazon Web Services customer. Unfortunately, it can only be used for static content; the loading of dynamic content will still be slower for far-away users than for nearby ones. Simply put, users in India will still see a half-second delay when loading the dynamic portions of your US-based website. And a half-second delay has a measurable impact on revenue.

Let's talk about speeding up dynamic content, globally.

The typical EC2 implementation comprises instances deployed in a single region. Such a deployment may span several availability zones for redundancy, but all instances are in roughly the same place, geographically.

This is fine for EC2-hosted apps with nominal revenue or a highly localized user base. But what if your users are spread around the globe? The problem can't be solved by moving your application to another region - that would simply shift the extra latency to another group.

For a distributed audience, you need a distributed infrastructure. But you can't simply launch servers around the world and expect traffic to reach them. Enter Global Server Load Balancing (GSLB).

A primer on GSLB

Broadly, GSLB is used to intelligently distribute traffic across multiple datacenters based on some set of rules.

With GSLB, your traffic distribution can go from this:

To this:

GSLB can be implemented as a feature of a physical device (including certain high-end load balancers) or as a part of a DNS service. Since we EC2 users are clearly not interested in hardware, our focus is on the latter: DNS-based GSLB.

Standard DNS behavior is for an authoritative nameserver to, given queries for a certain record, always return the same result. A DNS-based implementation of GSLB would alter this behavior so that queries return context-dependent results.

Example:

User A queries DNS for gslb.example.com -- response: 10.1.0.1

User B queries DNS for gslb.example.com -- response: 10.2.0.1

But what context should we use? Since our goal is to reduce wire latency, we should route users to the closest datacenter. IP blocks can be mapped geographically -- by examining a requestor's IP address, a GSLB service can return a geo-targeted response.

With geo-targeted DNS, our example would be:

User A (in China) queries DNS for geo.example.com -- response: 10.1.0.1

User B (in Spain) queries DNS for geo.example.com -- response: 10.2.0.1

Getting started

At a high level, implementation can be broken down into two steps

1) Deploying infrastructure in other AWS regions

2) Configuring GSLB-capable DNS

Infrastructure configurations will vary from shop to shop, but as an example, a read-heavy EC2 application with a single master database for writes should:

- deploy application servers to all regions

- deploy read-only (slave) database servers and/or read caches to all regions

- configure application servers to use the slave database servers and/or read caches in their region for reads

- configure application servers to use the single master in the "main" region for writes

This is what such an environment would look like:

When configuring servers to communicate across regions (app servers -> master DB; slave DBs -> master DB), you will need to use IP-based rules for your security groups; traffic from the "app-servers" security group you set up in eu-west-1 is indistinguishable from other traffic to your DB server in us-east-1. This is because cross-region communication is done using external IP addresses. Your best bet is to either automate security group updates or use Elastic IPs.

Note on more complex configurations: distributed backends are hard (see Brewer's [CAP] theorem). Multi-region EC2 environments are much easier to implement if your application tolerates the use of 1) regional caches for reads; 2) centralized writes. If you have a choice, stick with the simpler route.

As for configuring DNS, several companies have DNS-based GSLB service offerings:

- Dynect - Traffic Management (A records only) and CDN Manager (CNAMEs allowed)

- Akamai - Global Traffic Management

- UltraDNS - Directional DNS

- Comwired/DNS.com - Location Geo-Targeting

DNS configuration should be pretty similar for the vendors listed above. Basic steps are:

1) set up regional CNAMEs (us-east-1.example.com, us-west-1.example.com, eu-west-1.example.com, ap-southeast-1.example.com)

2) set up a GSLB-enabled "master" CNAME (www.example.com)

3) define the GSLB rules:

- For users in Asia, return ap-southeast-1.example.com

- For users in Europe, return eu-west-1.example.com

- For users in Western US, return us-west-1.example.com

- ...

- For all other users, return us-east-1.example.com

If your application is already live, consider abstracting the DNS records by one layer: geo.example.com (master record); us-east-1.geo.example.com, us-west-1.geo.example.com, etc. (regional records). Bring the new configuration live by pointing www.example.com (CNAME) to geo.example.com.

Bizo's experiences

Several of our EC2 applications serve embedded content for customer websites, so it's critical we minimize load times. Here's the difference we saw on one app after expanding into new regions (from us-east-1 to us-east-1, us-west-1, and eu-west-1) and implementing GSLB (load times provided by BrowserMob):

Load times before GSLB:

Load times after GSLB:

Reduced load times for everyone far from us-east-1. Users are happy, customers are happy, we're happy. Overall, a success.

It's interesting to see how the load is distributed throughout the day. Here's one application's HTTP traffic, broken down by region (ELB stats graphed by cloudviz):

Note that the use of Elastic Load Balancers and Auto Scaling becomes much more compelling with GSLB. By geographically partitioning users, peak hours are much more localized. This results in a wider difference between peak and trough demand per region; Auto Scaling adjusts capacity transparently, reducing the marginal cost of expanding your infrastructure to multiple AWS regions.

For our GSLB DNS service, we use Dynect and couldn't be more pleased. Intuitive management interface, responsive and helpful support, friendly, no-BS sales. Pricing is based on number of GSLB-enabled domains and DNS query rate. Contact Dynect sales if you want specifics (we work with Josh Delisle and Kyle York - great guys). Note that those intending to use GSLB with Elastic Load Balancers will need the CDN Management service.

Closing remarks

Previously, operating a global infrastructure required significant overhead. This is where AWS really shines. Amazon now has four regions spread across three continents, and there's minimal overhead to distribute your platform across all of them. You just need to add a layer to route users to the closest one.

The use of Amazon CloudFront in conjunction with a global EC2 infrastructure is a killer combo for improving application performance. And with Amazon continually expanding with new AWS regions, it's only going to get better.

@mikebabineau

Friday, April 2, 2010

GWT MVP Tables

Wednesday, March 24, 2010

Bizo Job - Designer

Position: Web / UI / UX Designer (San Francisco)

Summary:

We’re looking for an out-of-the-box thinker with a good sense-of-humor and a great attitude to join our product development team. As the first in-house Web / UI / UX Designer for Bizo, you will take responsibility for developing easy, powerful, consistent and high velocity web and interaction designs across all Bizo web products as well as marketing materials related to the Bizographic Targeting Platform, a revolutionary new way to target business advertising online. You will be a key player on an incredible team as we build our world-beating, game-changing, and massively scalable bizographic advertising and targeting platform. In a nutshell, you will be the voice of reason in all design and usability aspects of Bizo.

The Team:

We’re a small team of very talented people (if we do say so ourselves!). We use Agile development methodologies. We care about high quality results, not how many hours you’re in the office. We believe in strong design that helps people get stuff done!

The Ideal Candidate:

- Self-motivated

- Entrepreneurial / Hacker spirit

- Experience/Expertise with Adobe Illustrator (and/or similar design tools)

- Strong CSS skills

- Strong HTML skills

- Strong Javascript skills

- Understands the value of mock-ups (points for Balsamiq experience)

- Flash experience (bonus but not required)

- Enjoys working on teams

- Educational background or industry experience in Design or related field – points for advanced degrees

- Gets stuff done!

Please send a resume, cover letter and link to online portfolio to: donnie@bizo.com

Wednesday, March 17, 2010

Introducing Cloudviz

Friday, March 5, 2010

SSH to EC2 instance ID

I often find myself looking up EC2 nodes by instance ID so I can grab the external DNS name and SSH in. Fed up with the extra “ec2-describe-instance , copy, paste” layer, I threw together a function (basically a fancy alias) to SSH into an EC2 instance referenced by ID.

Assuming you’re on Mac OS X / Linux, just put this somewhere in ~/.profile, reload your terminal, and you’re good to go.

Alternatively, you can use the shell script version.

(note: cross-posted here)

Thursday, March 4, 2010

Example git/git-sh config

First, you should start with git-sh. It adds some bash shell customizations like a nice `PS1` prompt, tab completion, and incredibly short git-specific aliases. I'll cover some of the aliases later, but this is the thing that started me down the "how cool can I get my git environment" path.

Example Shell Session

A lot of my customizations are around aliases, so this is a quick overview, and then the aliases are defined/explained below.

Here is a made up example bash session with some of the commands:

# show we're in a basic java/whatever project

$ ls

src/ tests/

# start git-sh to get into a git-specific bash environment

$ git sh

# change some things

$ echo "file1" > src/package1/file1

$ echo "file2" > src/package2/file2

$ echo "file3" > src/package3/file2

# see all of our changes

$ d

# runs: git diff

# see only the changes in package1

$ dg package1

# runs: git diff src/package1/file1

# stage any path with 'package' in it

$ ag package

# runs: git add src/package1/file1 src/package2/file2 src/package3/file3

# we only wanted package1, reset package2 and package3

$ rsg package2

# runs: git reset src/package2/file2

$ rsg package3

# runs: git reset src/package3/file3

# see what we have staged now (only package1)

$ p

# runs: git diff --cached

# commit it

$ commit -m "Changed stuff in package1"

# runs: git commit -m "..."

That is the basic idea.

Most of the magic is from the [alias] section of .gitconfig, along with my .gitshrc allowing the git prefix to be dropped.

.gitconfig

The .gitconfig file is in your home directory and is for user-wide settings.

Here is my current .gitconfig with comments:

[user]

name = Stephen Haberman

email = stephen@exigencecorp.com

[alias]

# 'add all' stages all new+changed+deleted files

aa = !git ls-files -d | xargs -r git rm && git ls-files -m -o --exclude-standard | xargs -r git add

# 'add grep' stages all new+changed that match $1

ag = "!sh -c 'git ls-files -m -o --exclude-standard | grep $1 | xargs -r git add' -"

# 'checkout grep' checkouts any files that match $1

cg = "!sh -c 'git ls-files -m | grep $1 | xargs -r git checkout' -"

# 'diff grep' diffs any files that match $1

dg = "!sh -c 'git ls-files -m | grep $1 | xargs -r git diff' -"

# 'patch grep' diff --cached any files that match $1

pg = "!sh -c 'git ls-files -m | grep $1 | xargs -r git diff --cached' -"

# 'remove grep' remove any files that match $1

rmg = "!sh -c 'git ls-files -d | grep $1 | xargs -r git rm' -"

# 'reset grep' reset any files that match $1

rsg = "!sh -c 'git ls-files -c | grep $1 | xargs -r git reset' -"

# nice log output

lg = log --graph --pretty=oneline --abbrev-commit --decorate

# rerun svn show-ignore -> exclude

si = !git svn show-ignore > .git/info/exclude

# start git-sh

sh = !git-sh

[color]

# turn on color

diff = auto

status = auto

branch = auto

interactive = auto

ui = auto

[color "branch"]

# good looking colors i copy/pasted from somewhere

current = green bold

local = green

remote = red bold

[color "diff"]

# good looking colors i copy/pasted from somewhere

meta = yellow bold

frag = magenta bold

old = red bold

new = green bold

[color "status"]

# good looking colors i copy/pasted from somewhere

added = green bold

changed = yellow bold

untracked = red

[color "sh"]

branch = yellow

[core]

excludesfile = /home/stephen/.gitignore

# two-space tabs

pager = less -FXRS -x2

[push]

# 'git push' should only do the current branch, not all

default = current

[branch]

# always setup 'git pull' to rebase instead of merge

autosetuprebase = always

[diff]

renames = copies

mnemonicprefix = true

[svn]

# push empty directory removals back to svn at directory deletes

rmdir = true

.gitshrc

This is my .gitshrc file, heavily based off Ryan Tomayko's original.

Ryan's original comments are prefixed with #, I'll prefix my additions with ###, most of which are aliases to my [alias] entries above and some git-svn aliases.

#!/bin/bash

# rtomayko's ~/.gitshrc file

### With additions from stephenh

# git commit

gitalias commit='git commit --verbose'

gitalias amend='git commit --verbose --amend'

gitalias ci='git commit --verbose'

gitalias ca='git commit --verbose --all'

gitalias n='git commit --verbose --amend'

# git branch and remote

gitalias b='git branch -av' ### Added -av parameter

gitalias rv='git remote -v'

# git add

gitalias a='git add'

gitalias au='git add --update'

gitalias ap='git add --patch'

### Added entries for my .gitconfig aliases

alias aa='git aa' # add all updated/new/deleted

alias ag='git ag' # add with grep

alias agp='git agp' # add with grep -p

alias cg='git cg' # checkout with grep

alias dg='git dg' # diff with grep

alias pg='git pg' # patch with grep

alias rsg='git rsg' # reset with grep

alias rmg='git rmg' # remove with grep

# git checkout

gitalias c='git checkout'

# git fetch

gitalias f='git fetch'

# basic interactive rebase of last 10 commits

gitalias r='git rebase --interactive HEAD~10'

alias cont='git rebase --continue'

# git diff

gitalias d='git diff'

gitalias p='git diff --cached' # mnemonic: "patch"

# git ls-files

### Added o to list other files that aren't ignored

gitalias o='git ls-files -o --exclude-standard' # "other"

# git status

alias s='git status'

# git log

gitalias L='git log'

# gitalias l='git log --graph --pretty=oneline --abbrev-commit --decorate'

gitalias l="git log --graph --pretty=format:'%Cred%h%Creset -%C(yellow)%d%Creset %s %Cgreen(%cr)%Creset' --abbrev-commit --date=relative"

gitalias ll='git log --pretty=oneline --abbrev-commit --max-count=15'

# misc

gitalias pick='git cherry-pick'

# experimental

gitalias mirror='git reset --hard'

gitalias stage='git add'

gitalias unstage='git reset HEAD'

gitalias pop='git reset --soft HEAD^'

gitalias review='git log -p --max-count=1'

### Added git svn asliases

gitalias si='git si' # update svn ignore > exclude

gitalias sr='git svn rebase'

gitalias sp='git svn dcommit'

gitalias sf='git svn fetch'

### Added call to git-wtf tool

gitalias wtf='git-wtf'

Since I defined most of the interesting aliases in the .gitconfig [alias] section, it means they're all usable via git xxx, e.g. git ag foo, but listing alias ag='git ag' in .gitshrc means you can also just use ag foo, assuming you've started the git-sh environment.

It results in some duplication, but means they're usable from both inside and outside of git-sh, which I think is useful.